
Slides and replay of my “Using R with Hadoop” webinar now available #rstats #hadoop

January 25, 2013 — Jeffrey Breen

I owe a big “thank you” to all of you who attended my webinar yesterday “Using R with Hadoop”. Revolution Analytics partnered with us at Think Big Analytics to produce the webinar, and I owe them thanks as well.

For those of you who missed it, the slides and replay are now available from Revolution Analytics.

How to specify a MapR distro when launching Elastic MapReduce clusters with the Ruby CLI

January 9, 2013 — Jeffrey Breen

Amazon’s Elastic MapReduce Ruby client allows you to specify which of the supported Hadoop distributions to use, currently either Amazon’s Apache 1.0.3-based distribution or MapR’s M3 and M5 editions.

I found the CLI’s option documented at <http://docs.aws.amazon.com/ElasticMapReduce/latest/DeveloperGuide/emr-mapr.html>:

To launch an Amazon EMR job flow with MapR using the CLI

Set the --with-supported-products parameter to either mapr-m3 or mapr-m5 to run your job flow on the corresponding version of the MapR Hadoop distribution.

The following example launches a job flow running with the M3 Edition of MapR.

elastic-mapreduce --create --alive \
  --instance-type m1.xlarge --num-instances 5 \
  --with-supported-products mapr-m3

For additional information about launching job flows using the CLI, see the instructions for each job flow type in Create a Job Flow.
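If you launch clusters from R scripts, a small helper can assemble the command shown above and guard against typos in the product name. This is a hypothetical sketch: `emr_mapr_args()` is not part of any package, it uses only the flags quoted from the AWS documentation, and it merely builds the argument vector without launching anything.

```r
# Hypothetical helper (not from any package): builds the elastic-mapreduce
# arguments quoted above so a script can choose the MapR edition.
# Nothing is launched; pass the result to system2() yourself if desired.
emr_mapr_args <- function(edition = c("mapr-m3", "mapr-m5"),
                          instance_type = "m1.xlarge",
                          num_instances = 5) {
  edition <- match.arg(edition)  # only the two documented editions are accepted
  c("--create", "--alive",
    "--instance-type", instance_type,
    "--num-instances", as.character(num_instances),
    "--with-supported-products", edition)
}

args <- emr_mapr_args("mapr-m5")
cat("elastic-mapreduce", args, "\n")  # preview the full command line
# To actually launch (requires the Ruby CLI on your PATH and AWS credentials):
# system2("elastic-mapreduce", args)
```

Keeping the launch step commented out makes it easy to inspect the command before spending money on instances.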

Slides from “Tapping the Data Deluge with R” lightning talk #rstats #PAWCon

October 2, 2012 — Jeffrey Breen

Here is my presentation from last night’s Boston Predictive Analytics Meetup graciously hosted by Predictive Analytics World Boston.

The talk is meant to provide an overview of (some of) the different ways to get data into R, especially supplementary data sets to assist with your analysis.

All code and data files are available at github: http://bit.ly/pawdata (https://github.com/jeffreybreen/talk-201210-data-deluge)

The slides themselves are on slideshare: http://bit.ly/pawdatadeck (http://www.slideshare.net/jeffreybreen/tapping-the-data-deluge-with-r)

Slides from today’s Big Data Step-by-Step Tutorials: Infrastructure series and Intro to R+Hadoop with RHadoop’s rmr

March 10, 2012 — Jeffrey Breen

Here are my presentations from today’s Boston Predictive Analytics Big Data Workshop.

All code and config files are available at github: https://github.com/jeffreybreen/tutorial-201203-big-data

My portion of the workshop was divided into four parts: three focusing on different infrastructure scenarios, and a fourth diving deep into the rmr R package:

Big Data Step-by-Step: Infrastructure 1/3: Local VM


Starting small. Just because Big Data tools like Hadoop were designed to run at “web-scale,” across many nodes, doesn’t mean you need to build a cluster, or even dedicate a single machine, to get started. In this deck we download and install a virtual machine from Cloudera which comes complete with a functioning, single-node Hadoop installation. As long as you restrict the size of your data set appropriately, this is a great way to become accustomed to Hadoop and its tools. We walk through running a Hadoop Streaming job to make sure everything works. We later use this same VM to spawn a Hadoop cluster in the cloud (see part 3).

Big Data Step-by-Step: Infrastructure 2/3: Running R and RStudio on EC2

Not everyone has Big Data. Some of us have an occasional need to analyze a data set larger than comfortably fits in our existing analysis environment, whether because of disk, CPU, or memory constraints. For these times, launching a single, large machine in the cloud may fit the bill. This part of the presentation walks through how to launch just such a machine using Amazon’s EC2 cloud computing platform. Since I tend to run R and RStudio on Linux, that’s the focus of this tutorial, but the general outline may be helpful to others as well.

Big Data Step-by-Step: Infrastructure 3/3: Taking it to the cloud… easily… with Whirr

Scale up using the cloud. The Apache Whirr cloud management tool makes it easy to launch a Hadoop cluster on EC2. We use the Cloudera VM from presentation #1 as a launching point for the cluster and, thanks to a Whirr-generated proxy script, submit jobs and fetch results from our local VM just as before. For extra credit, we see how Whirr can save us money by bidding for excess capacity via EC2’s spot instances.

Big Data Step-by-Step: Using R & Hadoop (with RHadoop’s rmr package)

Crunching Big Data with R. Hadoop was originally a Java-only ecosystem, but Hadoop Streaming allows the creation of mappers, reducers, and combiners in any language which can read stdin and write stdout. That doesn’t mean you want to write code to manage I/O at that level, though. After a quick (and undoubtedly incomplete) survey of Hadoop-related R packages, we walk through some of the abstractions and features of RHadoop’s rmr package which make it easier for R developers to get started. We walk through a sample mapper and reducer, demonstrating and documenting the native R objects which carry the data from step to step.
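To make the stdin/stdout contract concrete, here is a minimal word-count sketch in plain R (not rmr). The names `mapper()` and `reducer()` are illustrative only. In a real Streaming job each would be a separate script that reads `readLines(file("stdin"))` and writes tab-separated key/value lines with `cat()`, and Hadoop itself would sort the mapper output by key before the reducer runs. Here they take and return character vectors so the logic can be tried without a cluster.

```r
# Minimal sketch of Hadoop Streaming-style word count logic in plain R.
# Each function works on a character vector standing in for stdin/stdout.

mapper <- function(lines) {
  words <- unlist(strsplit(tolower(lines), "[^a-z0-9]+"))
  words <- words[nzchar(words)]        # drop empty tokens from the split
  paste(words, 1L, sep = "\t")         # emit "key<TAB>value" pairs
}

reducer <- function(pairs) {
  pairs <- sort(pairs)                 # stands in for Hadoop's shuffle/sort
  parts <- strsplit(pairs, "\t", fixed = TRUE)
  keys  <- vapply(parts, `[`, character(1), 1)
  vals  <- as.integer(vapply(parts, `[`, character(1), 2))
  totals <- tapply(vals, keys, sum)    # sum the counts for each key
  paste(names(totals), totals, sep = "\t")
}

out <- reducer(mapper(c("Hello Hadoop", "hello R")))
cat(out, sep = "\n")
```

Even this toy shows why a package like rmr is welcome: the string parsing and re-serialization between steps is pure overhead that has nothing to do with the analysis.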

Thank you to the session’s sponsors, all the speakers, and to an interesting and engaged audience. Special thanks to John Versotek for arranging such an informative and enjoyable day, and for the opportunity to take part.

Use geom_rect() to add recession bars to your time series plots #rstats #ggplot

August 15, 2011 — Jeffrey Breen

Zach Mayer’s work reproducing John Hussman’s Recession Warning Composite prompted me to dig this trick out of my (Evernote) notebook.

First, let’s grab some data to plot using the very handy getSymbols() function from Jeffrey Ryan’s quantmod package. We’ll load the U.S. unemployment rate (UNRATE) from the St. Louis Fed’s Federal Reserve Economic Data (src="FRED") and convert the time series into a data.frame:

library(quantmod)  # getSymbols(); also attaches xts/zoo for time() and coredata()
unrate = getSymbols('UNRATE', src='FRED', auto.assign=FALSE)
unrate.df = data.frame(date=time(unrate), coredata(unrate))

Now FRED provides a USREC time series which we could use to draw the recessions. It’s a bit awkward, though, as it contains a boolean to flag recession months since January 1921. All we really want are the start and end dates of each recession. Fortunately, the St. Louis Fed publishes just such a table on their web site. (See the answer to “What dates are used for the US recession bars in FRED graphs?” on http://research.stlouisfed.org/fred2/help-faq/.) Sometimes it’s still easier to cut-and-paste (and the static table covers another 64 years, go figure):

# strip.white=TRUE drops the space after each comma before the Date conversion
recessions.df = read.table(textConnection(
"Peak, Trough
1857-06-01, 1858-12-01
1860-10-01, 1861-06-01
1865-04-01, 1867-12-01
1869-06-01, 1870-12-01
1873-10-01, 1879-03-01
1882-03-01, 1885-05-01
1887-03-01, 1888-04-01
1890-07-01, 1891-05-01
1893-01-01, 1894-06-01
1895-12-01, 1897-06-01
1899-06-01, 1900-12-01
1902-09-01, 1904-08-01
1907-05-01, 1908-06-01
1910-01-01, 1912-01-01
1913-01-01, 1914-12-01
1918-08-01, 1919-03-01
1920-01-01, 1921-07-01
1923-05-01, 1924-07-01
1926-10-01, 1927-11-01
1929-08-01, 1933-03-01
1937-05-01, 1938-06-01
1945-02-01, 1945-10-01
1948-11-01, 1949-10-01
1953-07-01, 1954-05-01
1957-08-01, 1958-04-01
1960-04-01, 1961-02-01
1969-12-01, 1970-11-01
1973-11-01, 1975-03-01
1980-01-01, 1980-07-01
1981-07-01, 1982-11-01
1990-07-01, 1991-03-01
2001-03-01, 2001-11-01
2007-12-01, 2009-06-01"), sep=',',
colClasses=c('Date', 'Date'), header=TRUE, strip.white=TRUE)
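If you would rather derive the table from FRED’s USREC series mentioned above, run-length encoding collapses a monthly 0/1 flag into start and end dates. This is a sketch under stated assumptions: `flags_to_ranges()` is a hypothetical helper, the input is assumed to be a monthly data.frame with a Date column and a 0/1 flag, and the toy values below are invented rather than downloaded. Whether each run’s first flagged month matches the Peak date in the St. Louis Fed table depends on how the flag is defined, so compare the two before substituting one for the other.

```r
# Hypothetical helper: collapse a monthly 0/1 recession flag into
# one row per recession, using the first and last flagged month of each run.
flags_to_ranges <- function(dates, flag) {
  runs   <- rle(as.integer(flag))        # consecutive runs of 0s and 1s
  ends   <- cumsum(runs$lengths)         # index of each run's last month
  starts <- ends - runs$lengths + 1      # index of each run's first month
  keep   <- runs$values == 1             # keep only the flagged (recession) runs
  data.frame(Peak = dates[starts[keep]], Trough = dates[ends[keep]])
}

# Invented toy data: eight months with two flagged stretches
usrec_toy <- data.frame(
  date = seq(as.Date("2000-01-01"), by = "month", length.out = 8),
  rec  = c(0, 1, 1, 0, 0, 1, 1, 1)
)
flags_to_ranges(usrec_toy$date, usrec_toy$rec)
```

The result has the same Peak/Trough column names as recessions.df, so it could feed the geom_rect() layer in the same way.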

Now the only “gotcha” is that our recession data start long before our unemployment data, so let’s trim it to match:

recessions.trim = subset(recessions.df, Peak >= min(unrate.df$date) )
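Trimming matters because ggplot2 trains the x scale on every layer, so untrimmed rectangles would stretch the axis back to 1857. The subset() above keeps only recessions that begin on or after the first observation. If you would rather keep a recession already under way when the series starts, one alternative (not the original code) is to keep any recession that overlaps the data and clip its start. `trim_overlapping()` is a hypothetical name, and the dates in the example are made up.

```r
# Alternative trim (not the post's original code): keep recessions whose
# Trough falls on or after the first observation, clipping Peak to that date.
trim_overlapping <- function(recessions, first_date) {
  kept <- subset(recessions, Trough >= first_date)
  kept$Peak <- pmax(kept$Peak, first_date)  # pmax() keeps the Date class
  kept
}

# Made-up example: the first interval starts before the data and gets clipped
rec <- data.frame(Peak   = as.Date(c("2000-01-01", "2001-01-01")),
                  Trough = as.Date(c("2000-06-01", "2001-03-01")))
trim_overlapping(rec, as.Date("2000-03-01"))
```

With the post’s objects this would be called as trim_overlapping(recessions.df, min(unrate.df$date)).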

Finally, we use ggplot2’s geom_line() layer to draw the unemployment data and transparent (alpha=0.2) pink rectangles to overlay the recessions:

library(ggplot2)
g = ggplot(unrate.df) + geom_line(aes(x=date, y=UNRATE)) + theme_bw()
g = g + geom_rect(data=recessions.trim, aes(xmin=Peak, xmax=Trough, ymin=-Inf, ymax=+Inf), fill='pink', alpha=0.2)
print(g)  # or just type g at the console

One-liners which make me love R: twitteR’s searchTwitter() #rstats

July 21, 2011 — Jeffrey Breen

R reminds me a lot of English. It’s easy to get started, but very difficult to master. So for all those times I’ve spent… well, forever… trying to figure out the “R way” of doing something, I’m glad to share these quick wins.

My recent R tutorial on mining Twitter for consumer sentiment wouldn’t have been possible without Jeff Gentry’s amazing twitteR package (available on CRAN). It does so much of the behind-the-scenes heavy lifting to access Twitter’s REST APIs that one line of code is all you need to perform a search and retrieve the (even paginated) results:

library(twitteR)

tweets = searchTwitter("#rstats", n=1500)

You can search for anything, of course; “#rstats” is just an example. (And if you’re really into that hashtag, the twitteR package even provides an Rtweets() function which hardcodes that search string for you.) Setting n=1500 requests the maximum number of tweets the Search API supports, though you may retrieve fewer, since Twitter’s search indices contain only a couple of days’ tweets.

What you get back is a list of tweets (technically “status updates”):

> head(tweets)
[[1]]
[1] "Cloudnumberscom: CloudNumbers.com \023 #Rstats gets real in the cloud http://t.co/Vw4Gupr via @AddToAny"
[[2]]
[1] "0_h_r_1: CloudNumbers.com \023 #Rstats gets real in the cloud via DecisionStats - I came across Cloudnumbers.com . ... http://tinyurl.com/5sjagjg"
[[3]]
[1] "cmprsk: RT I just joined the beta to run #Rstats in the cloud with cloudnumbers.com http://t.co/lvVp0YJ via @cloudnumberscom http://bit.ly/lbSruR"
[[4]]
[1] "0_h_r_1: I just joined the beta to run #Rstats in the cloud with cloudnumbers.com http://t.co/lvVp0YJ via @cloudnumberscom"
[[5]]
[1] "cmprsk: RT man, the #rstats think people I am too soft on #sas, the #sas people think I am too soft on #wps, the #wps pe... http://bit.ly/innEv8"
[[6]]
[1] "keepstherainoff: Thanks to @cmprsk @geoffjentry and @MikeKSmith for colour-coded #Rstats GUI advice"
> class(tweets[[1]])
[1] "status"
attr(,"package")
[1] "twitteR"

Now that you have some tweets, the fun really begins. To get you started, the status class includes a very handy toDataFrame() accessor method (see ?status):

> library(plyr)
> tweets.df = ldply(tweets, function(t) t$toDataFrame() )

> str(tweets.df)
'data.frame': 131 obs. of 10 variables:
 $ text        : Factor w/ 122 levels "CloudNumbers.com \023 #Rstats gets real in the cloud http://t.co/Vw4Gupr via @AddToAny",..: 1 2 3 4 5 6 7 8 9 10 ...
 $ favorited   : logi NA NA NA NA NA NA ...
 $ replyToSN   : logi NA NA NA NA NA NA ...
 $ created     : POSIXct, format: "2011-07-04 13:50:39" "2011-07-04 13:48:10" "2011-07-04 13:29:00" "2011-07-04 13:23:42" ...
 $ truncated   : logi FALSE FALSE FALSE FALSE FALSE FALSE ...
 $ replyToSID  : logi NA NA NA NA NA NA ...
 $ id          : Factor w/ 131 levels "87941406873751552",..: 1 2 3 4 5 6 7 8 9 10 ...
 $ replyToUID  : logi NA NA NA NA NA NA ...
 $ statusSource: Factor w/ 17 levels "<a href="http://twitter.com/tweetbutton" rel="nofollow">Tweet Button</a>",..: 1 2 3 1 3 4 5 5 3 4 ...
 $ screenName  : Factor w/ 64 levels "Cloudnumberscom",..: 1 2 3 2 3 4 2 5 3 6 ...
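If you’d rather not pull in plyr, the same list-to-data.frame pattern can be sketched in base R: turn each list element into a one-row data.frame, then stack the rows with rbind(). The records below are hypothetical plain lists standing in for twitteR status objects (which can’t be created offline), so `to_row()` converts the list directly instead of calling toDataFrame().

```r
# Base-R sketch of the ldply() pattern: one-row data.frame per element,
# then row-bind them. These records are hypothetical stand-ins for tweets.
records <- list(
  list(screenName = "alice", text = "trying #rstats", truncated = FALSE),
  list(screenName = "bob",   text = "more #rstats",   truncated = FALSE)
)

to_row <- function(r) data.frame(r, stringsAsFactors = FALSE)  # list -> 1-row df
records.df <- do.call(rbind, lapply(records, to_row))          # stack the rows
str(records.df)
```

With real tweets, to_row() would simply become function(t) t$toDataFrame(), mirroring the ldply() call above.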

You can pull a particular user’s tweets just as easily with the userTimeline() function. Heck, the package even lets you tweet from R if you use Jeff’s companion ROAuth package, but that requires more than one line….

Enjoy!

Use Dropbox’s public folder for web publishing via Notepad (or emacs or…)

July 19, 2011 — Jeffrey Breen

Remember The Good Old Days when all you needed to host a web site was a file system and Notepad (or emacs or TeachText)?

Well, I do, and I can’t say that I miss them… until last week when I tried to insert the JavaScript for some motion charts into a WordPress.com post. It’s impossible. Literally. Don’t waste your time. Seriously.

Self-hosted WordPress blogs can use some custom field hackery, but there’s no such option for us easy-way-out WordPress.com users.

Dropbox to the rescue

Just save your HTML page to your “Public” directory in Dropbox and it will get its own public URL which you can find in Dropbox’s context menu:

It’s not the ideal embedding I was hoping for — WordPress.com even strips out iframes — but it’s quick and easy and does the job.

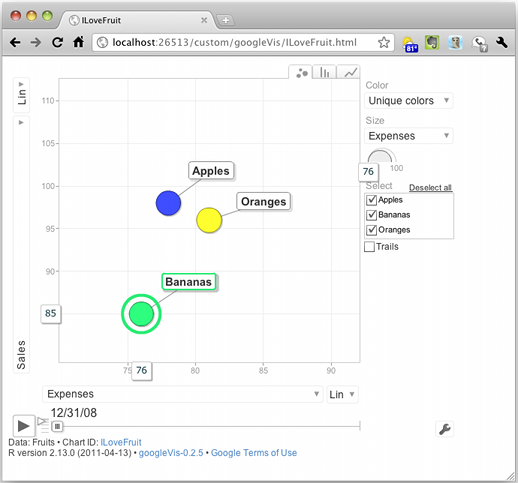

One-liners which make me love R: Make your data dance (Hans Rosling style) with googleVis #rstats

July 14, 2011 — Jeffrey Breen

It may be a cliché, but much of R’s utility comes from its amazing community. And by community, I am specifically referring to the bright, hard-working people who are willing to share their knowledge and code with the rest of us. Because of their contributions, we can do some amazingly cool and useful things with very little code of our own. It is in this context that I launch this new series to highlight packages and functions which make it easy to do jaw-droppingly cool and useful things.

First up: the googleVis package by Markus Gesmann and Diego de Castillo, which makes it easy, often with just one line of R, to harness the Google Visualization API. Annotated timelines, gauges, maps, org charts, tree maps, and more are suddenly at your command.

I’m going to focus on the motion chart, popularized by Hans Rosling in his groundbreaking 2006 TED talk on global economic development. (If you haven’t seen it yet, you should. Right now. Seriously. Go.) Motion charts are an innovative, interactive way to display multidimensional time series. The googleVis package even comes with some sample data to make them easy to try out.

The package is available from CRAN if you need to install it.

To get started, load the package and the included “Fruits” data.frame:

library(googleVis)
data(Fruits)

This data.frame contains some sample data about sales of various fruits at different locations for different years. There’s even a proper Date column already constructed for us from the numeric Year column.

To make the chart, we need to give the gvisMotionChart() function our data.frame and tell it a few things about it: the column which identifies the items to examine (idvar=Fruit), the time dimension (timevar=Date), and optionally a name to use to identify the chart in the generated HTML and JavaScript (we’ll use chartid="ILoveFruits"):

M = gvisMotionChart(data=Fruits, idvar="Fruit", timevar="Date", chartid="ILoveFruits")

That’s it.
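Before handing your own data (rather than the bundled Fruits data) to gvisMotionChart(), it can help to confirm that idvar and timevar identify each row uniquely. As I understand the motion chart, it draws one bubble per idvar value at each time point, so duplicated pairs are a problem; googleVis may already reject such data itself, and this sketch just makes the requirement explicit. `check_motion_data()` is a hypothetical helper, not part of googleVis, and the toy numbers are invented.

```r
# Hypothetical pre-flight check (not part of googleVis): each (idvar, timevar)
# pair should appear at most once in the data handed to gvisMotionChart().
check_motion_data <- function(df, idvar, timevar) {
  stopifnot(idvar %in% names(df), timevar %in% names(df))
  dup <- duplicated(df[c(idvar, timevar)])  # flags repeated id/time pairs
  if (any(dup)) {
    warning(sum(dup), " duplicated ", idvar, "/", timevar, " pair(s)")
  }
  !any(dup)
}

# Invented toy data shaped like Fruits (id column, Date column, a measure)
fruit_toy <- data.frame(
  Fruit = c("Apples", "Apples", "Oranges"),
  Date  = as.Date(c("2008-12-31", "2009-12-31", "2008-12-31")),
  Sales = c(10, 12, 7)
)
check_motion_data(fruit_toy, "Fruit", "Date")
```

If the check fails, aggregating the measures by id and date (for example with aggregate()) before charting is one way to restore one row per pair.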

You can view your chart with the plot() method googleVis provides for its chart objects. It will automatically open a browser window and serve up your chart through R’s internal web server:

plot(M)

Since WordPress doesn’t allow embedded JavaScript, please click through to see the motion chart in action:

You can also access all 165 lines of the generated HTML and JavaScript and save it to disk:

dir.create("output", showWarnings=FALSE)  # make sure the target directory exists
cat(unlist(M$html), file="output/ILoveFruits.html")

Time suck alert: googleVis may make them easy to create, but motion charts can be a lot of fun to play with. You have been warned…

If you want to take a look at an example with some real data, you might be interested in the 20 Years of the U.S. Domestic Airline Market In 20 seconds post on my work blog.

Finally, here are the slides from my lightning talk on this topic at this month’s Greater Boston useR Group meeting:

Have fun!

installing R 2.13.1 on Amazon EC2’s “Amazon Linux” AMI #rstats

July 8, 2011 — Jeffrey Breen

Condensed from this post (and comments) on David Chudzicki’s blog, tweaked, and updated for R-2.13.1.

Assumes you’re starting with a virgin “Amazon Linux” AMI. I picked “Basic 64-bit Amazon Linux AMI 2011.02.1 Beta” (AMI Id: ami-8e1fece7) because it was marked as free tier eligible on the “Quick Start” tab of AWS’s “Launch Instance” dialog box:

$ sudo yum -y install make libX11-devel.* libICE-devel.* libSM-devel.* libdmx-devel.* libx* xorg-x11* libFS* libX* readline-devel gcc-gfortran gcc-c++ texinfo tetex
$ wget http://cran.r-project.org/src/base/R-2/R-2.13.1.tar.gz
$ tar zxf R-2.13.1.tar.gz && cd R-2.13.1
$ ./configure && make
$ # make coffee... or finish your PhD thesis... (yes, it takes that long)
[...]
$ # finally, if all is well:
$ sudo make install
$ cd
$ R --version
R version 2.13.1 (2011-07-08)
Copyright (C) 2011 The R Foundation for Statistical Computing
ISBN 3-900051-07-0
Platform: x86_64-unknown-linux-gnu (64-bit)
R is free software and comes with ABSOLUTELY NO WARRANTY.
You are welcome to redistribute it under the terms of the
GNU General Public License version 2.
For more information about these matters see
http://www.gnu.org/licenses/.

As always, refer to the Installation and Administration manual for details and options.

If you want to install RCurl, or anything which depends on it like twitteR, you’ll need to install libcurl & friends first:

$ sudo yum -y install libcurl libcurl-devel